Will an RCT Change Anyone’s Mind? Should It?

By Mauricio Romero, Justin Sandefur and Wayne Aaron Sandholtz

Editor’s note: This cross-post originally appeared on the Center for Global Development blog.

We respond to critics of our evaluation of Liberia’s “partnership” school program, distinguishing legitimate concerns about the charter-style program itself—which can be turned into testable hypotheses—from methodological limitations to what an impact evaluation can show.

The three of us are running a randomized controlled trial of the "Partnership Schools for Liberia" (PSL) program, which is President Ellen Johnson Sirleaf's effort to introduce something akin to American-style charter schools or the UK’s academies to Liberia's underperforming education system. Charter schools are controversial almost everywhere, and in some circles, so are randomized trials.

Recently, two advocacy groups—Action Aid and Education International—circulated a call for proposals offering researchers €30,000 to "conduct an in-depth qualitative investigation" of the partnership school program. The document explicitly seeks to dismiss the RCT results before the study has been concluded, asserting that "there are serious concerns over whether the evaluation of [the program] can be truly objective or generate any useful learning."

Of course, we object to this blanket dismissal of evidence, and below we offer a point-by-point response to the criticisms. Unfortunately, Action Aid and Education International have drawn their policy conclusions before collecting data. Their call for proposals notes that the research they fund will inform a "campaign against the increasing privatisation and commercialisation of education" and that "the report will be used for advocacy work… to challenge privatisation trends." We agree that what these organizations describe is advocacy, not research, as their conclusions are openly advertised in advance.

We also agree with Action Aid and Education International on more substantive matters. The partnership school initiative may fail. And many of the criticisms raised below rest on sound factual premises. The key difference is that we do not read these points as criticisms of our study. Rather, many of the points below are concerns over the program itself, many of which we share—and we believe Liberia’s Minister of Education, George K. Werner, shares them as well, which is why he commissioned a randomized evaluation. We would reframe these concerns as hypotheses that we have explicitly designed the randomized evaluation to test. In some instances, the "criticisms" restate the core motivation for the RCT.

Here are Action Aid and Education International's criticisms, verbatim and unedited (though re-ordered to group similar comments together), with our responses.

1. Partnership schools are very expensive to run

"PSL schools are receiving significantly more funding ($50 per child enrolled) than the government control schools against which they will be compared."

"PSL schools receive significantly more political and managerial attention from the Ministry."

True! Unlike normal government primary schools, the Ministry decreed that PSL schools must be free at all grade levels, including early childhood education. Providing free services costs money, as do all the books, teacher training, and other things PSL provides. Crucially, so far all of the extra money spent on the program has been paid by philanthropic donors, not the Government of Liberia.

The fact that the program costs money is not, in and of itself, a failing of the RCT. If a clinical trial of a new drug that costs $1,000 per patient reduces the incidence of heart disease by 20 percent, we wouldn't declare the study a failure. Rather, we would evaluate the drug based on its comparative cost-effectiveness. Are there cheaper ways to achieve the same 20 percent reduction in heart disease? If so, doctors should prescribe those instead.

The same logic applies to partnership schools in Liberia. Even if the RCT shows learning gains, it remains to be asked whether the gains justify the cost of the program.
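The comparison described above can be made concrete with a minimal sketch. It assumes each option is summarized by a cost per person and an effect measured in percentage points; the `Intervention` class, the function names, and the "hypothetical_alternative" figures are illustrative assumptions, not numbers from the evaluation. Only the $1,000 cost and 20 percent effect come from the drug example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Intervention:
    name: str
    cost_per_person: float    # extra dollars spent per person
    effect_pct_points: float  # gain in the outcome, in percentage points


def cost_per_point(item: Intervention) -> float:
    """Dollars spent per percentage point of improvement."""
    if item.effect_pct_points <= 0:
        raise ValueError("cost-effectiveness needs a positive effect")
    return item.cost_per_person / item.effect_pct_points


def most_cost_effective(options: List[Intervention]) -> Intervention:
    """Return the option that buys a point of improvement most cheaply."""
    return min(options, key=cost_per_point)


options = [
    # The drug from the example above: $1,000 per patient, 20 percent reduction.
    Intervention("drug_from_example", cost_per_person=1000, effect_pct_points=20),
    # Hypothetical alternative, invented for illustration only.
    Intervention("hypothetical_alternative", cost_per_person=300, effect_pct_points=10),
]

best = most_cost_effective(options)
print(best.name, cost_per_point(best))
```

In this made-up comparison the alternative has the smaller effect but is cheaper per point gained, which is the sense in which an effective program can still lose a cost-effectiveness comparison.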

Two complications arise in our case. First, very few alternative "treatments" to increase learning in Liberia have been rigorously tested. In a perfect world, we could test alternative interventions head-to-head with partnership schools as part of our evaluation. If other aid donors who are spending heavily on Liberian education would like to subject their programs to that head-to-head comparison, we would love to include them. (Seriously, USAID, EU, and GPE: call us.)

The second complication is that measuring the costs of this program is very tricky. Philanthropists have poured millions into these schools, on the (somewhat optimistic) assumption that these costs will be amortized over many years and held constant under a hypothetical expansion of the program. In economics terminology, donors agreed to pay for long-term investments that represent fixed costs for PSL regardless of its scale, and operators claim their per pupil operating (or variable) costs are quite low. As evaluators, we are reluctant to take implementers' word at face value when they report very high fixed costs and claim very low variable costs, just as we would not take their word at face value if they claimed big benefits from their program without proof. More to come on this in the coming months.
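To see why the amortization assumption matters so much, here is a rough sketch of the arithmetic. It assumes a one-time fixed investment spread evenly over pupils and years, plus a constant variable cost per pupil. The function name and every dollar figure below are hypothetical and are not estimates of PSL costs.

```python
def cost_per_pupil_year(fixed_cost, variable_cost_per_pupil, pupils, years):
    """Average cost per pupil per year when a one-time fixed cost is
    spread evenly across all pupils and all years of operation."""
    if pupils <= 0 or years <= 0:
        raise ValueError("pupils and years must be positive")
    return fixed_cost / (pupils * years) + variable_cost_per_pupil


# Hypothetical figures, for illustration only.
FIXED = 1_000_000   # one-time investment, in dollars
VARIABLE = 40       # operating cost per pupil per year, in dollars

# Small pilot, judged over a single year.
pilot = cost_per_pupil_year(FIXED, VARIABLE, pupils=10_000, years=1)

# Optimistic case: ten times the pupils, amortized over ten years.
scaled = cost_per_pupil_year(FIXED, VARIABLE, pupils=100_000, years=10)

print(pilot, scaled)
```

With the same underlying spending, the per-pupil figure falls from 140 to 41 dollars purely because of the assumed scale and time horizon, which is why reported fixed and variable costs deserve independent scrutiny.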

2. Private operators may (illegally) try to exclude slower students

"PSL schools have been allowed to cap class sizes at 45—so have smaller classes than control schools (and many children previously enrolled—probably those from more disadvantaged backgrounds—have thus been excluded at short notice)."

"There is some evidence of providers being selective of children: those enrolled came on a “first come first served basis” but information available to parents is asymmetrical (better off parents get there first). All children in Bridge schools were also assessed and potentially re-graded."

It is true that Bridge International Academies, which runs 24 of the 94 PSL schools, has special permission to cap class sizes at 55 (not 45) pupils, while other operators may cap classes at 65 pupils, though some allow larger class sizes in practice. To be clear, selective admissions based on fees or academic aptitude are explicitly forbidden by the PSL rules, and enrollment should be on a first-come-first-served basis.

Are operators abiding by these rules, and are poorer kids really getting equal access? This is one of the core concerns that the RCT was designed to address; and we would argue this issue highlights a strength rather than a weakness of the evaluation.

The key to our strategy here is something called intention-to-treat analysis. The basic idea is to track pupils who were in a given school before it was known whether the school would be part of the PSL program. That means we follow pupils who stay in school as well as those who leave or get kicked out. All of those kids who were in a PSL school last year count as part of the treatment group for the evaluation. So any operator who thinks they can boost their evaluation performance by rejecting weak students is woefully mistaken. It also means that we can track exactly who gets included and who gets excluded, and test whether poorer or slower pupils lose out, as alleged.
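The logic of intention-to-treat can be illustrated with a toy example. This is a simplified sketch using a plain difference in means on invented data; the record layout, function names, and scores are all assumptions for illustration, and the actual evaluation may use a different estimator.

```python
from statistics import mean

# Each record: (originally_in_treatment_school, still_enrolled_there, test_score).
# Hypothetical pupils, sampled from rosters drawn up BEFORE assignment was known.
pupils = [
    (True, True, 70), (True, True, 60), (True, False, 30), (True, False, 40),
    (False, True, 55), (False, True, 45), (False, True, 35), (False, True, 45),
]


def itt_effect(records):
    """Difference in mean scores by ORIGINAL school, ignoring who left."""
    treated = [score for assigned, _, score in records if assigned]
    control = [score for assigned, _, score in records if not assigned]
    return mean(treated) - mean(control)


def stayers_only_gap(records):
    """Naive comparison that keeps only pupils still enrolled at follow-up."""
    treated = [score for assigned, stayed, score in records if assigned and stayed]
    control = [score for assigned, stayed, score in records if not assigned and stayed]
    return mean(treated) - mean(control)


itt = itt_effect(pupils)          # counts the two pupils who left
naive = stayers_only_gap(pupils)  # silently drops them
print(itt, naive)
```

In this invented data the two lowest-scoring pupils leave the treatment school. The stayers-only comparison shows a gap of 20 points, while the intention-to-treat comparison shows 5, because the pupils who left still count toward the treatment group.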

What we know so far is that despite the enrollment caps in PSL schools, they have boosted enrollment significantly relative to regular government schools. Free tuition seems to be popular, and there was apparently some excess capacity in government schools that could be filled once PSL started.

It remains to be seen in ongoing analysis of our data (a) whether the new students flocking to PSL schools are richer or better prepared, and (b) whether they were previously unenrolled or are transferring from other public schools.

3. This model can't scale

"Some providers have been allowed to set conditions on which schools they would take over (e.g. Bridge insisted on schools on accessible roads, in clustered locations with electricity and good internet connectivity. Such conditions are highly atypical)"

"There is also evidence of selectivity of teachers and principals (with providers being allowed to remove those they do not consider good enough (who are transferred to other public schools)"

This is the first we've heard about demanding electricity in advance, but the other points are correct—particularly the demand for 2G internet connectivity in Bridge schools (as well as Omega schools) and reassignment of teachers. All of the private “partners” in the Liberia program had some say about where they would operate, and all had some ability to re-assign teachers who were unable to pass a Ministry test. Note that all partnership schools in Liberia take on the unionized, civil service teachers already working in the school, and those teachers cannot be fired.

In evaluation jargon, both of these concerns speak to a possible gap between internal validity and external validity.

The RCT is internally valid in that it will provide a reliable estimate of the effect on learning outcomes for a given population of students of converting a normal public school into a partnership school during the 2016/17 pilot. This in and of itself is policy-relevant information. The pilot is small (fewer than 3 percent of public schools), yet over 18 percent of the population is within 5 km of a PSL school. But the bigger policy question in Liberia is whether converting even more regular government schools into partnership schools would raise scores.

The schools in the pilot have had a leg up because they could re-assign underperforming teachers and recruit new teachers from among recent training college graduates. We will continue to track teacher re-assignments through the evaluation, but data from the baseline shows that on average only one teacher per school from the 2015/16 teacher roster has been re-assigned to another location, and this number is the same in both treatment and control schools. Bridge (representing just over a quarter of total treatment schools) is the exception here, having on average 3 re-assignments per school.

Much like the issue of their funding advantage, the question is whether this privilege could be extended to many more schools if the program were expanded. If this is a zero-sum game of shuffling under-qualified teachers around the country, that's cause for concern, and it is an issue we have planned to measure in the evaluation.

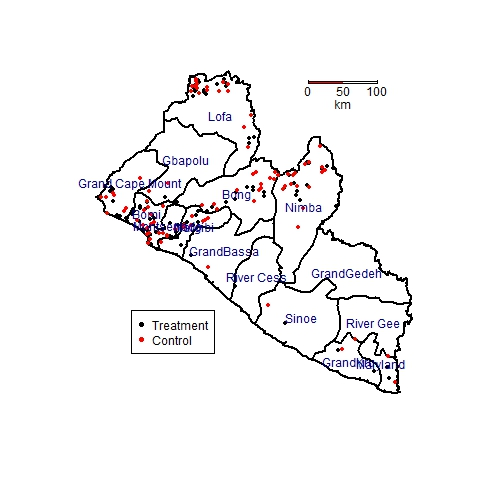

Similarly, if partnership schools simply picked the low-hanging fruit this year by working in easier environments, that also bodes ill for any future expansion. In reality, this is unclear. Some operators, like Bridge and to a lesser extent Omega, strongly insisted on working in a narrow range of schools with high infrastructure demands. But other operators volunteered to work in more remote areas with fewer resources at baseline. (In all cases, treatment schools were randomized among a final list of eligible schools.) The accompanying map shows the distribution of partnership (i.e., treatment) and traditional public (control) schools across the country.

Overall, Liberia's partnership schools are not a random sample of the country, and they took over public schools which were somewhat larger and had better infrastructure than the national average. However, they are spread across 13 of Liberia's 15 counties, and are disproportionately concentrated outside Monrovia in poorer rural counties, so the pilot evaluation should give a good idea of whether this model can succeed in difficult environments.

4. Partnership schools will teach to the test

“The indicators used in the evaluation (likely to be variations on the EGRA / EGMA - Early Grade Reading / Maths Assessments) to measure literacy and numeracy are likely to be predictable to the private providers but less so to government schools (so there is a risk of distortion through teaching to the test)"

Teaching to the test is a valid concern in general, but not a serious concern for the evaluation for two reasons. First, we disagree that teaching to the test is a risk that is unique to partnership schools. There is little reason why private providers would be more likely than public school principals to conclude that the tests they'll be given for the midterm evaluation in 2017 might look similar to the tests they were given during the baseline survey in 2016. Second, we have explicitly reserved the right to change the learning assessment before then, or add entirely new modules, just to keep everyone guessing.

Conclusion

To sum up, we share many of the questions that have been raised by critics of Liberia’s partnership schools initiative. Indeed, the core concerns raised by advocacy organizations are a rephrasing of the central questions for the evaluation: is this model cost-effective relative to other possible education interventions, will these schools favor richer or better-prepared students, and how much value do these schools really add once we account for any differences in student composition? Our first round of follow-up survey data will be collected in June, and we can begin to answer those questions.

In the meantime, President Sirleaf and Minister Werner deserve credit for committing publicly to delaying any major expansion of the program until the evaluation is completed. Minister Werner said in a recent op-ed “…these are early days for PSL. While I believe it holds great potential, my team and I are clear that the program will not be scaled significantly until the data shows it works and we have the capacity within government to manage it effectively.”

We are not naïve enough to think that any kind of data will placate all critics of charter-style initiatives like this, but we do look forward to an ongoing debate that is increasingly disciplined by facts as data comes in from these pilot schools.